Can Cerebras wafer-scale chips run simulations faster than real time? A single Cerebras CS-1 can outperform one of the fastest supercomputers in the US by more than 200x. The CS-1 is built around the Wafer-Scale Engine, the largest chip ever built.

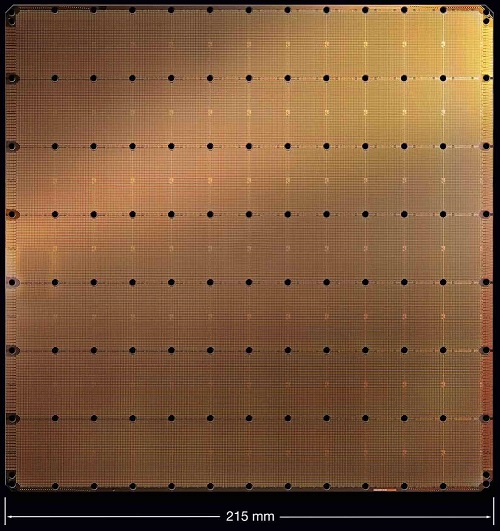

Cerebras is a computer systems company dedicated to accelerating deep learning. The pioneering Wafer-Scale Engine (WSE), the largest chip ever built, is at the heart of its deep learning system, the Cerebras CS-1.

56x larger than any other chip, the WSE delivers more compute, more memory, and more communication bandwidth. This enables AI research at previously impossible speeds and scale.

The Cerebras Wafer-Scale Engine measures 46,225 mm² and has 1.2 trillion transistors and 400,000 AI-optimized cores.

By comparison, the largest graphics processing unit (GPU) measures 815 mm² and has 21.1 billion transistors.

In August 2020, Lawrence Livermore National Laboratory (LLNL) and Cerebras Systems announced the integration of the 1.2-trillion-transistor Wafer-Scale Engine (WSE) chip into the National Nuclear Security Administration's (NNSA) 23-petaflop Lassen supercomputer.
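The "56x larger" figure can be checked against the quoted numbers. The short calculation below uses only the areas and transistor counts stated in the text; the variable names are illustrative.

```python
# Figures quoted in the text.
wse_area_mm2 = 46_225
wse_transistors = 1.2e12
gpu_area_mm2 = 815
gpu_transistors = 21.1e9

# Ratio of WSE to the largest GPU, by die area and by transistor count.
area_ratio = wse_area_mm2 / gpu_area_mm2
transistor_ratio = wse_transistors / gpu_transistors

print(f"area ratio: {area_ratio:.1f}x")              # prints "area ratio: 56.7x"
print(f"transistor ratio: {transistor_ratio:.1f}x")  # prints "transistor ratio: 56.9x"
```

Both ratios land between 56 and 57, which matches the 56x claim.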

Outperforming one of the fastest supercomputers in the US by more than 200x

In collaboration with researchers at the National Energy Technology Laboratory (NETL), Cerebras showed that a single wafer-scale Cerebras CS-1 can outperform one of the fastest supercomputers in the US by more than 200x. The problem was to solve a large, sparse, structured system of linear equations of the sort that arises in modeling physical phenomena, such as fluid dynamics, using a finite-volume method on a regular three-dimensional mesh. Solving these equations is fundamental to such efforts as forecasting the weather; finding the best shape for an airplane's wing; predicting the temperatures and radiation levels in a nuclear power plant; modeling combustion in a coal-burning power plant; or making pictures of the layers of sedimentary rock in places likely to contain oil and gas.
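To make "sparse, structured system on a regular three-dimensional mesh" concrete, here is a minimal sketch of the kind of matrix-vector product such a system implies. It assumes a 7-point stencil in which each cell couples only to its six face neighbors. The coefficients (6 on the diagonal, -1 off the diagonal), the zero boundary treatment, and the function name `apply_stencil` are illustrative assumptions, not the coefficients of the NETL problem.

```python
def apply_stencil(u, nx, ny, nz):
    """Return A*u for an illustrative 7-point stencil on an nx*ny*nz mesh.

    Each cell contributes 6*u[cell] minus the values of its face neighbors.
    Neighbors that fall outside the mesh are treated as zero.
    """
    def idx(i, j, k):
        # Flatten a 3D cell coordinate into a 1D vector index.
        return (i * ny + j) * nz + k

    offsets = ((-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1))
    out = [0.0] * (nx * ny * nz)
    for i in range(nx):
        for j in range(ny):
            for k in range(nz):
                acc = 6.0 * u[idx(i, j, k)]
                for di, dj, dk in offsets:
                    ni, nj, nk = i + di, j + dj, k + dk
                    if 0 <= ni < nx and 0 <= nj < ny and 0 <= nk < nz:
                        acc -= u[idx(ni, nj, nk)]
                out[idx(i, j, k)] = acc
    return out


ones = [1.0] * 27
result = apply_stencil(ones, 3, 3, 3)
print(result[13], result[0])  # interior cell, corner cell: prints "0.0 3.0"
```

The matrix is never stored: every row has at most seven nonzeros at fixed offsets, so each output value depends only on a cell and its immediate neighbors. That locality is what makes this class of problem a natural fit for a mesh of cores with private memory.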

Three key factors enabled the successful speedup:

- The memory performance on the CS-1.

- The high bandwidth and low latency of the CS-1’s on-wafer communication fabric.

- A processor architecture optimized for high bandwidth computing.

Cerebras will present this work at the SC20 supercomputing conference. According to the Cerebras blog post, for certain classes of supercomputing problems, wafer-scale computation overcomes current barriers to enable real-time and faster-than-real-time performance, along with other applications that would otherwise be precluded by the failure of strong scaling in current high-performance computing systems.

Cerebras has built the world's largest chip. At 72 square inches (462 cm²), it is the largest square that can be cut from a 300 mm wafer.

The chip inside the Cerebras CS-1 is about 60 times the size of a large conventional chip such as a CPU or GPU. It was built to provide a much-needed breakthrough in computer performance for deep learning. Cerebras says its systems are providing an otherwise impossible speed boost to leading-edge AI applications in fields ranging from drug design and astronomy to particle physics and supply chain optimization.

Faster than Real-Time

The wafer-scale CS-1 is the first system to demonstrate sufficient performance to simulate over a million fluid cells faster than real time. This means that when the CS-1 is used to simulate a power plant based on data about its present operating conditions, it can tell you what is going to happen in the future faster than the laws of physics produce that same result. (Of course, the CS-1 consumes power to do this, whereas the power plant generates power.)

Faster-than-real-time model-based control is one of many exciting new applications that become possible with this kind of performance. Beyond a certain threshold, speed does not just shorten the time to a result; it enables whole new operating paradigms.

To bridge the gap from a linear solver to fluid dynamics, we need to consider the full application. At the outermost level, several iterations are performed to choose the largest stable simulation time increment. Each iteration uses the Semi-Implicit Method for Pressure Linked Equations (SIMPLE), the algorithm that solves the Navier-Stokes equations by generating systems of linear equations at each time step. SIMPLE constructs three velocity linear systems and one pressure linear system for each phase of material (e.g., fuel and air). BiCGSTAB then iterates on each linear system until it converges.
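For readers unfamiliar with BiCGSTAB, the following is a minimal, unpreconditioned textbook version in plain Python. It is a sketch for illustration only, not the Cerebras or NETL implementation: the function names, the tolerance, and the small nonsymmetric tridiagonal test system are all assumptions made for the example.

```python
from math import sqrt


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def bicgstab(matvec, b, tol=1e-10, max_iter=200):
    """Solve A x = b given only a function that computes A*v. Returns (x, iterations)."""
    n = len(b)
    x = [0.0] * n
    r = list(b)            # residual b - A*x for the initial guess x = 0
    r_hat = list(r)        # fixed shadow residual
    rho = alpha = omega = 1.0
    v = [0.0] * n
    p = [0.0] * n
    b_norm = sqrt(dot(b, b)) or 1.0
    it = 0
    for it in range(1, max_iter + 1):
        rho_new = dot(r_hat, r)
        if rho_new == 0.0:
            break          # breakdown: the method cannot continue
        beta = (rho_new / rho) * (alpha / omega)
        p = [r[i] + beta * (p[i] - omega * v[i]) for i in range(n)]
        v = matvec(p)
        alpha = rho_new / dot(r_hat, v)
        s = [r[i] - alpha * v[i] for i in range(n)]
        if sqrt(dot(s, s)) / b_norm < tol:
            x = [x[i] + alpha * p[i] for i in range(n)]
            return x, it
        t = matvec(s)
        omega = dot(t, s) / dot(t, t)
        x = [x[i] + alpha * p[i] + omega * s[i] for i in range(n)]
        r = [s[i] - omega * t[i] for i in range(n)]
        if sqrt(dot(r, r)) / b_norm < tol:
            return x, it
        rho = rho_new
    return x, it


def tridiag_matvec(u):
    """Illustrative nonsymmetric operator: 4 on the diagonal, -1 below, -2 above."""
    n = len(u)
    out = []
    for i in range(n):
        acc = 4.0 * u[i]
        if i > 0:
            acc -= u[i - 1]
        if i < n - 1:
            acc -= 2.0 * u[i + 1]
        out.append(acc)
    return out


x_true = [float(i + 1) for i in range(8)]
b = tridiag_matvec(x_true)
x, iters = bicgstab(tridiag_matvec, b)
max_error = max(abs(a - c) for a, c in zip(x, x_true))
```

Each iteration needs only two matrix-vector products, a handful of dot products, and vector updates. The dot products are the only steps that require global communication, which is why low-latency on-wafer communication matters for this solver.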

All steps used in constructing the systems of equations are also local vector operations. We make the conservative assumption that constructing the systems takes 50% of the total run time.
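The structure described above can be turned into a rough back-of-the-envelope estimate of how far ahead of real time a simulation runs. The sketch below is an assumption-laden illustration: the function name, the simplification that every linear system needs the same number of BiCGSTAB iterations, and all of the input values in the example call are hypothetical placeholders, not measurements from the CS-1.

```python
def realtime_ratio(sim_dt_s, outer_iters, phases, bicgstab_iters,
                   seconds_per_bicgstab_iter, construction_fraction=0.5):
    """Simulated seconds advanced per wall-clock second (above 1 means faster than real time)."""
    systems_per_phase = 3 + 1  # three velocity systems plus one pressure system
    solver_s = (outer_iters * phases * systems_per_phase
                * bicgstab_iters * seconds_per_bicgstab_iter)
    # If constructing the systems takes a fixed fraction of total run time,
    # the solver accounts for the remaining fraction.
    wall_s = solver_s / (1.0 - construction_fraction)
    return sim_dt_s / wall_s


# Hypothetical inputs chosen only to exercise the formula.
ratio = realtime_ratio(sim_dt_s=0.1, outer_iters=5, phases=2,
                       bicgstab_iters=50, seconds_per_bicgstab_iter=1e-5)
print(f"{ratio:.2f}x real time")
```

With these made-up inputs the ratio comes out at about 2.5, meaning each wall-clock second would advance the simulation by roughly 2.5 seconds. The point of the sketch is the shape of the calculation: the time per BiCGSTAB iteration is multiplied through every nested loop, so it dominates whether the result lands above or below 1.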

Wafer-Scale Supercomputer

The Cerebras CS-1 is the world's first wafer-scale computer system. It is 26 inches tall and fits in a standard data center rack. It is powered by a single Cerebras wafer (Figure 1). All processing, memory, and core-to-core communication occur on the wafer. The wafer holds almost 400,000 individual processor cores, each with its own private memory and its own network router. The cores form a square mesh. Each router connects to the routers of the four nearest cores in the mesh. The cores share nothing; they communicate via messages sent through the mesh.
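The mesh topology described above can be sketched in a few lines. This toy model assumes cores are addressed by (row, column) and that links do not wrap around at the edges of the wafer; both the addressing scheme and the function names are assumptions made for illustration, not the actual Cerebras routing scheme.

```python
def mesh_neighbors(row, col, rows, cols):
    """Cores directly linked to (row, col): up, down, left, right, where they exist."""
    candidates = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    return [(r, c) for r, c in candidates if 0 <= r < rows and 0 <= c < cols]


def min_hops(src, dst):
    """Fewest router-to-router hops between two cores when links join only nearest neighbors."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])


corner = mesh_neighbors(0, 0, 4, 4)     # a corner core has only two links
interior = mesh_neighbors(1, 2, 4, 4)   # an interior core has four
far = min_hops((0, 0), (3, 3))          # opposite corners of a 4x4 toy mesh

print(corner, len(interior), far)  # prints "[(1, 0), (0, 1)] 4 6"
```

In a nearest-neighbor mesh, a message between adjacent cores takes a single hop, which suits stencil computations where each cell exchanges data only with the cells next to it.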

The Architecture of the Processor

The Cerebras CS-1 is powered by a single wafer we call the Wafer-Scale Engine (WSE). The WSE contains 400,000 independent cores, each with its own memory. These cores are small, powerful, and particularly efficient at accessing their own memory and communicating with neighboring cores.